-

Extreme Perception

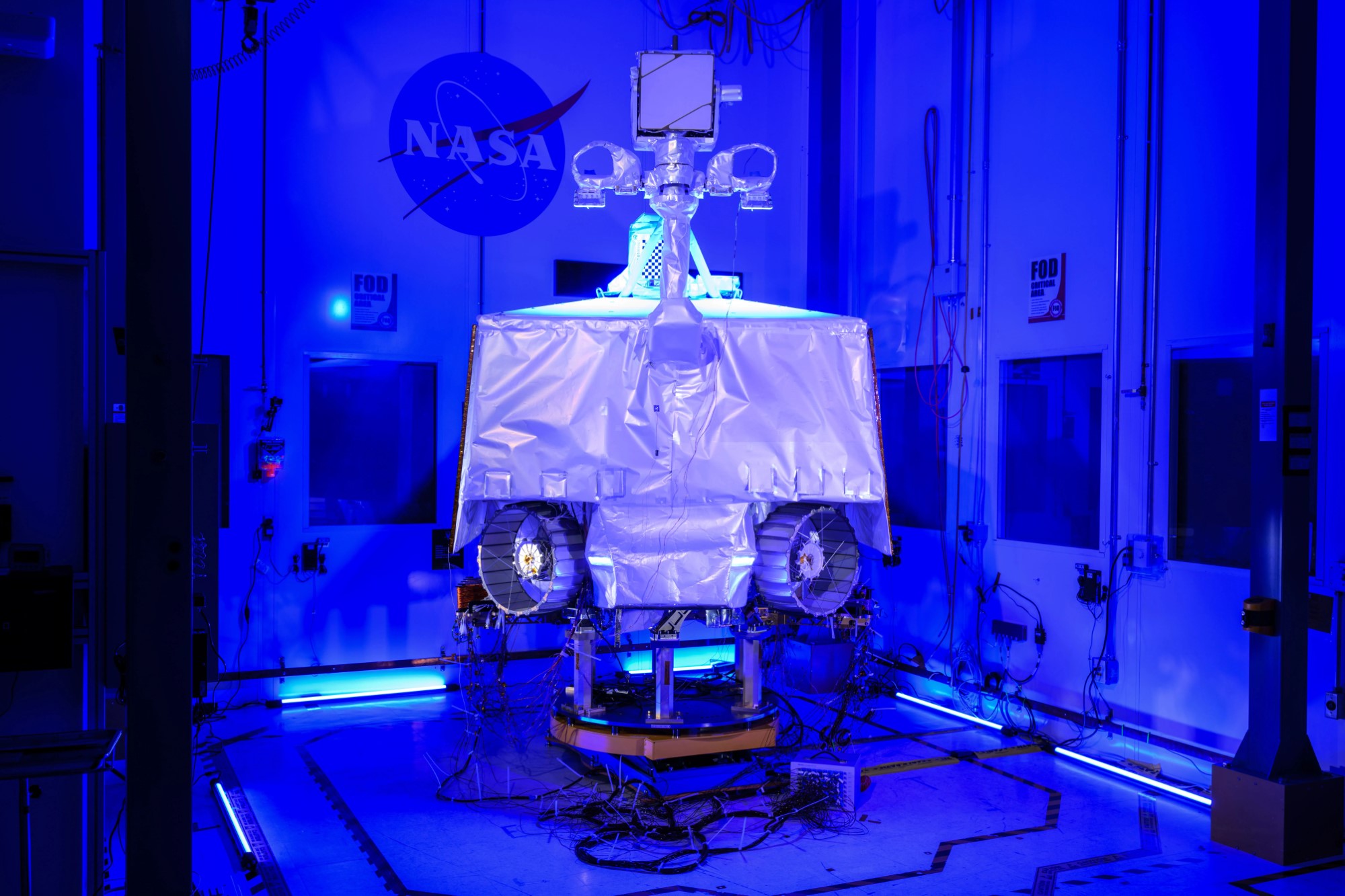

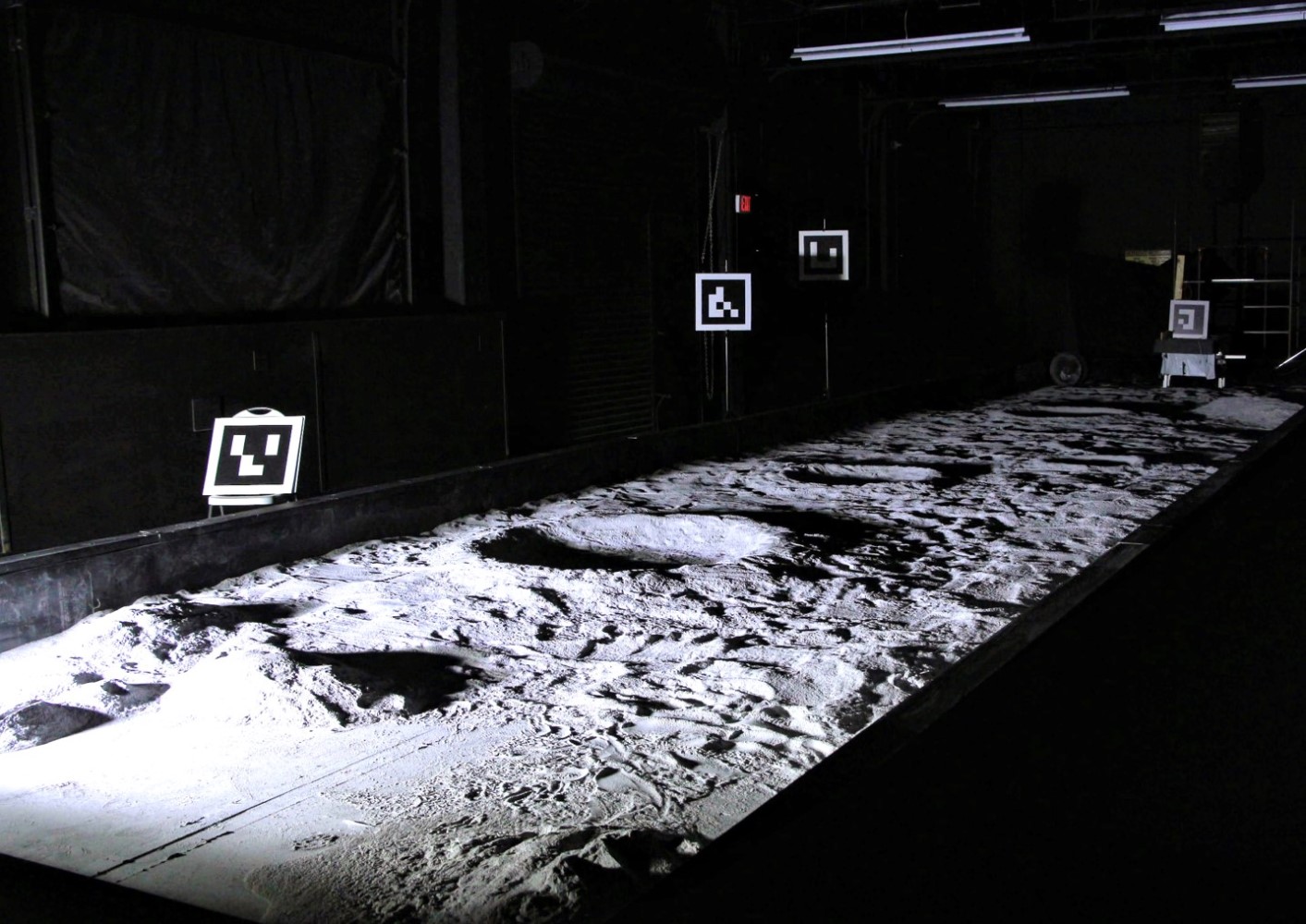

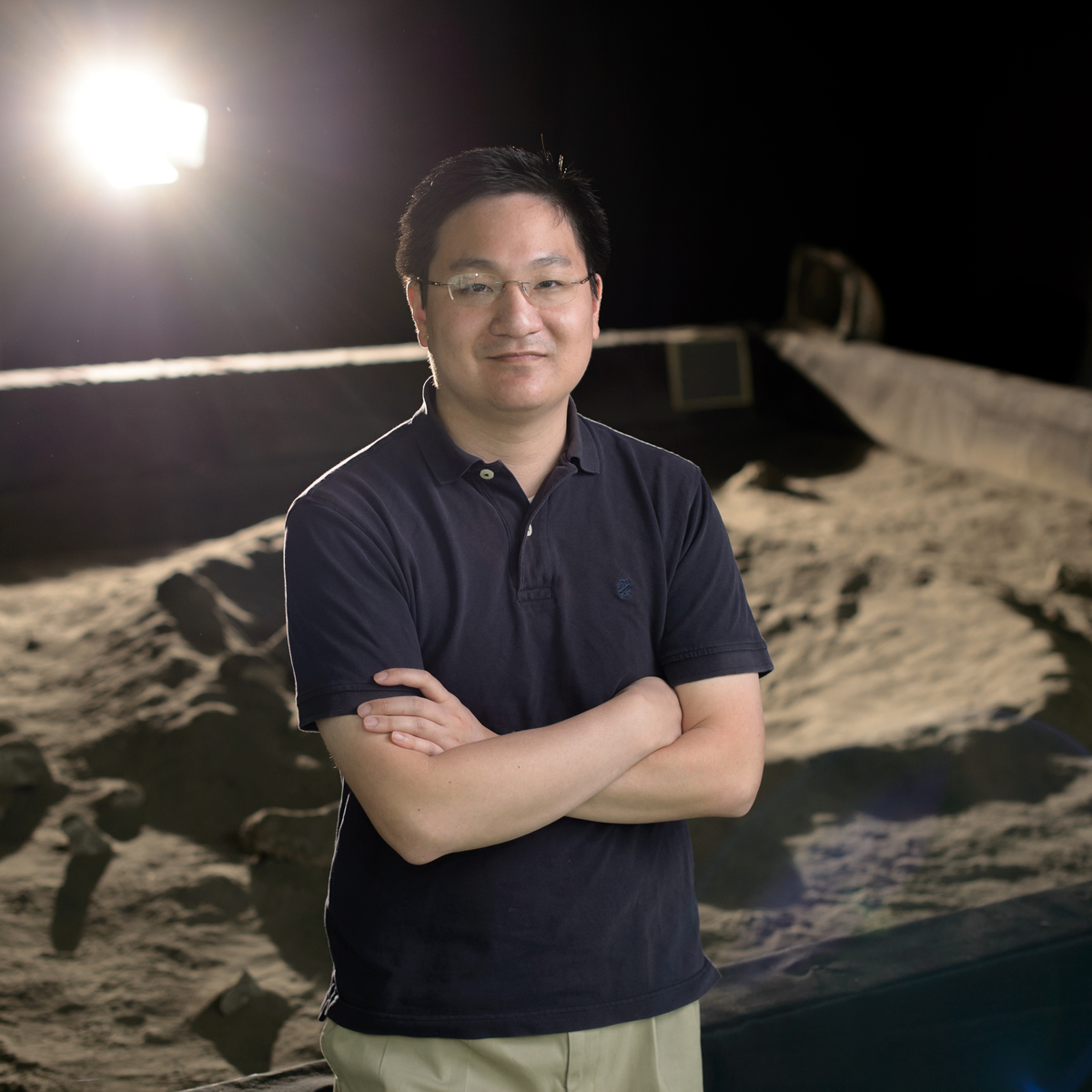

My research seeks to improve the performance of computer vision in extreme environments by understanding and exploiting the physics of light transport. It is well known that active illumination in the form of “structured light” can greatly enhance image understanding; however, accurately modeled natural illumination can be leveraged in the same manner. My dissertation explored new ways to integrate computer vision, lighting, and photometry to tackle the most challenging perception environments. I have continued this approach in my professional career at NASA by leading navigation efforts on the VIPER and Resource Prospector missions, both targeted to the polar regions of the Moon. I also led appearance modeling efforts on several of NASA’s photorealistic simulators for the Moon and Europa.

-

Planetary Skylight and Cave Exploration

Skylights are recently discovered “holes” on the surface of the Moon and Mars that may lead to planetary caves. These vast, stadium-sized openings represent an unparalleled opportunity to access subterranean spaces on other planets. This research thrust developed robotic mechanisms and operations concepts for exploration of these features. Tyrobot (“Tyrolean Robot”) was developed to map skylight walls and floors while suspended from a cable tightrope. PitCrawler is a wheeled robot that uses a flexible chassis, an extremely low center of gravity, and energetic maneuvering to negotiate bouldered floors and sloped descents. We demonstrated these prototypes in analog pit mines that simulated the size and shape of lunar skylights.

-

Sensor Characterization

Many types of imaging and range sensors exist on the market. Manufacturer specifications are often non-comparable, collected in ideal settings, and not oriented to robotics applications. The goal of this work was to provide a common basis for empirical comparison of optical sensors in underground environments. Collected performance metrics, such as data distribution and accuracy, are then used to optimize sensor selection. The work included both an ideal laboratory characterization, in which a novel 3D “checkerboard” target was scanned from multiple perspectives, and an in situ component, in which mobile mapping in archetype underground scenes was compared. I helped create and lead the Sensor Characterization Lab at CMU, which successfully fulfilled a DOD contract and other funded work in this area. At NASA Ames, I built the “Lunar Lab” facility and a similar technical practice for characterization of sensors used in planetary robotics.

-

Novel Optical Sensors

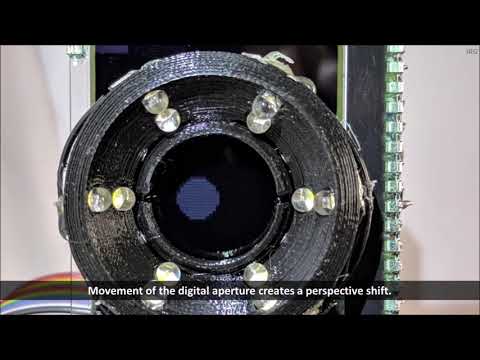

I developed several novel sensors for mapping and imaging. My work on VIPER resulted in the first space-qualified LED lighting system for rover navigation (active stereo). My image-directed structured light scanner optically co-located a stereo camera pair with the output illumination of a DLP projector using a beam splitter. This configuration enables intelligent sample acquisition in the optical domain with high-resolution interpolation and texturing. During my thesis, I also built a room-sized gonioreflectometer/sun simulator with no moving parts using an array of commodity SLR cameras and LED illumination. This design was accurate enough to extract BRDFs of planetary materials for rendering purposes at 1/100th the cost of commercial spherical gantries.

Previous Research

-

Multi-sensor Fusion for Navigation and Mapping (2009-2014)

Current robotic maps are limited by decades-old range sensing technology. Only multi-sensor (LIDAR, camera, RADAR and multispectral) approaches can provide the density and quality of data required for automated inspection, operation and science. My PhD research explored synergistic cooperation of multi-modal optical sensing to enhance understanding of geometry (super-resolution), sampling of locations of interest (image-directed scanning) and material understanding from source motion.

-

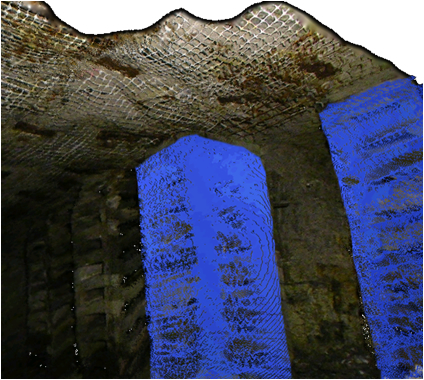

Model Visualization (2009-2014)

Humans are consumers of 3D models for training, oversight, operations and presentation. My research investigated new methods for immersive display that enhanced these tasks. Approaches included non-photorealistic techniques for feature highlighting, point splatting, hole filling for imperfect data, adaptive BRDF selection, radiance estimation and geometry image parameterizations. I later dabbled in 3D printing of robot-made models as tools for science analysis and outreach.

-

Commercial Lunar Robotics (2008-2011)

I supported ongoing research in lunar robotics at CMU. I led automation of RedRover, a prototype equatorial rover designed to win the Google Lunar XPRIZE, and helped develop its stereo mapping capability. More recently, I contributed to the autonomous lunar lander project, developing algorithms for terrain modeling and assessment used in mid-flight landing site selection. A combined lander/rover team from CMU and a spinoff company was intended to visit the Lacus Mortis pit on the Moon.

-

Subterranean Mapping (2007-2012)

Robots are poised to proliferate in underground civil inspection and mining operations. I took over development of the CaveCrawler mobile robot, which was a platform for research in these areas. CaveCrawler has inspected and mapped many miles of underground mines and tunnels using LIDAR. We also demonstrated rescue scout robots that locate victims and carry supplies in disaster situations.

-

Borehole Scanning and Imaging (2006-2009)

I developed robots for inspecting hazardous and access-constrained underground environments. The MOSAIC camera is a borehole-deployed inspection robot that generates 360-degree panoramas. MOSAIC captures long-range photography in darkness using active illumination and HDR imaging. Ferret was an underground void inspection robot capable of deploying through a 3” drill core into unlined boreholes. It produced 3D models of voids with a fiber-optic LIDAR.

-

Human Odometer (2005)

The Human Odometer was a wearable personal localization system for first responders and warfighters. The key idea is that real-time pose and activity information for each member of a team provides enhanced situational awareness for command and control. Bluetooth accelerometers and gyroscopes integrated into a suit tracked a person’s steps and orientation. This information was reported to a battalion commander through a handheld PDA which brought up context-sensitive mapping and position information. My undergraduate senior thesis investigated Kalman filtering to fuse intermittent IMU, GPS, and pedometry data for more accurate positioning as well as learning parameters and variances of the step-detection model.
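As a rough illustration of the fusion idea, the sketch below runs a one-dimensional Kalman filter in which each detected step advances the position estimate (prediction) and an occasional GPS fix corrects it (update). It is a simplified stand-in, not the thesis implementation: the function name, the fixed step length, and the variance values are all assumptions chosen for the example.

```python
def fuse_steps_and_gps(num_steps, gps_fixes, step_length=0.7,
                       step_var=0.01, gps_var=4.0):
    """1D Kalman filter over distance travelled (illustrative only).

    num_steps   -- number of detected steps (hypothetical pedometer output)
    gps_fixes   -- dict mapping step index -> GPS distance reading in metres;
                   missing indices model intermittent GPS
    step_length -- assumed stride in metres
    step_var    -- assumed variance added per step (dead-reckoning drift)
    gps_var     -- assumed variance of a GPS reading
    """
    x, p = 0.0, 0.0  # position estimate and its variance
    track = []
    for k in range(num_steps):
        # Predict: one step forward, uncertainty grows.
        x += step_length
        p += step_var
        # Update: only when a GPS fix is available at this step.
        z = gps_fixes.get(k)
        if z is not None:
            gain = p / (p + gps_var)
            x += gain * (z - x)
            p *= (1.0 - gain)
        track.append((x, p))
    return track


if __name__ == "__main__":
    for k, (x, p) in enumerate(fuse_steps_and_gps(10, {4: 4.0, 9: 7.5})):
        print(f"step {k}: position {x:.3f} m, variance {p:.4f}")
```

Between fixes the variance grows with every step, and each fix pulls the estimate toward the GPS reading in proportion to how uncertain the dead-reckoned position has become.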

-

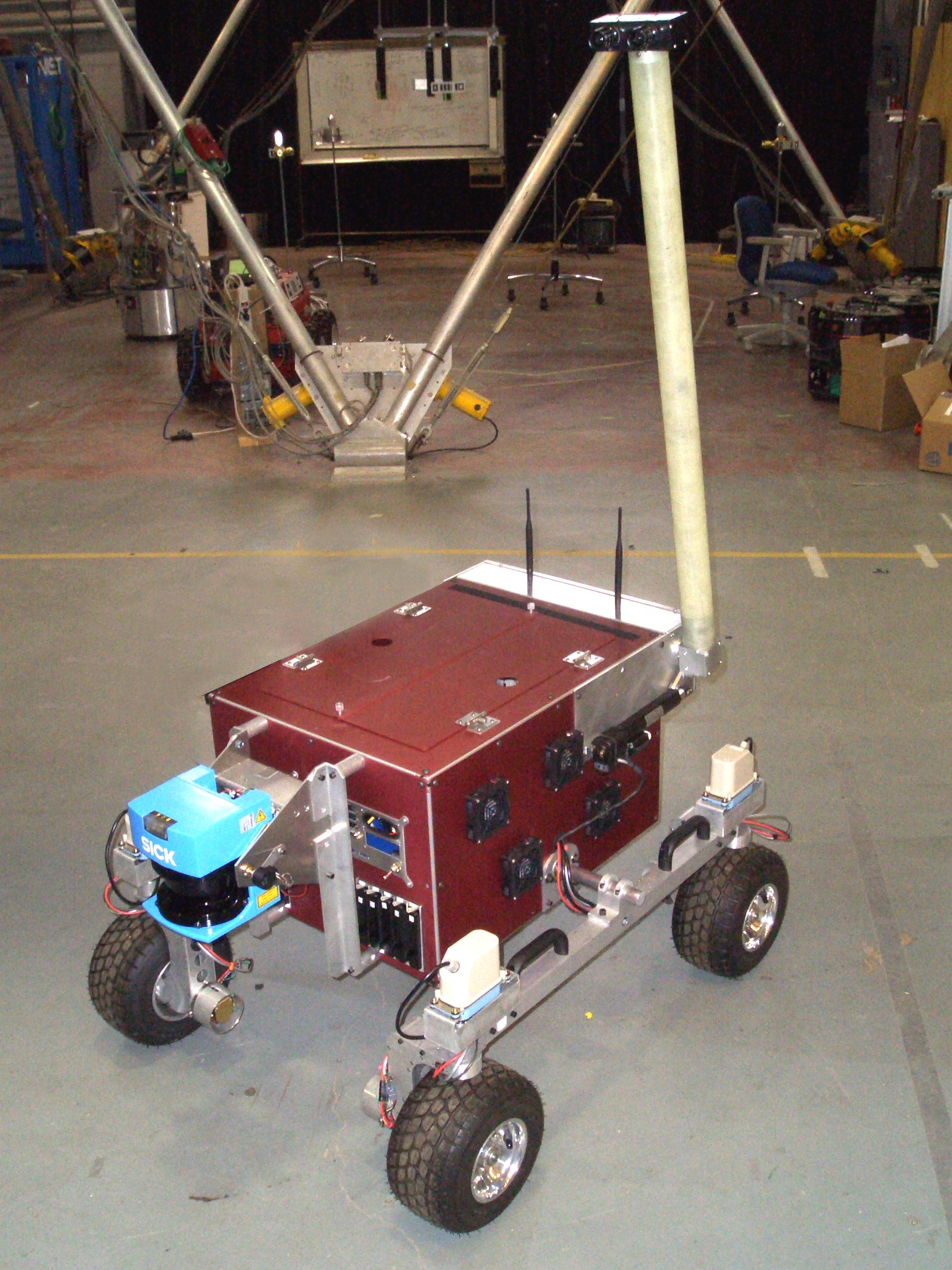

Hazard Detection for Prospecting (2005)

Through an undergraduate internship with the Tele-supervised Autonomous Robotics Lab at Carnegie Mellon, I developed a terrain hazard detection system for a multi-robot lunar prospecting (K10 rover) testbed. This included control of a nodding SICK LIDAR scan mechanism using embedded C, acquisition of range data, and development of PCA-based terrain traversability analysis algorithms in MATLAB.
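A common form of PCA-based traversability analysis fits a plane to a local patch of range points and reads slope and roughness off the eigen-decomposition of the patch covariance. The sketch below shows that general idea in Python with NumPy; it is not the original MATLAB code, and the function name and both thresholds are assumptions made for the example.

```python
import numpy as np


def patch_traversability(points, max_slope_deg=20.0, max_roughness=0.05):
    """Classify one terrain patch from its 3D points (illustrative only).

    points        -- (N, 3) array of x, y, z samples in metres, z up
    max_slope_deg -- assumed slope limit in degrees
    max_roughness -- assumed limit on out-of-plane standard deviation (m)
    Returns (slope_deg, roughness, traversable).
    """
    pts = np.asarray(points, dtype=float)
    centered = pts - pts.mean(axis=0)
    cov = centered.T @ centered / len(pts)
    # eigh returns eigenvalues in ascending order; the eigenvector of the
    # smallest one is the direction of least variance, i.e. the plane normal.
    eigvals, eigvecs = np.linalg.eigh(cov)
    normal = eigvecs[:, 0]
    # Slope is the angle between the fitted normal and the vertical axis.
    cos_tilt = np.clip(abs(normal[2]), 0.0, 1.0)
    slope_deg = float(np.degrees(np.arccos(cos_tilt)))
    # Roughness is the standard deviation of points about the fitted plane.
    roughness = float(np.sqrt(max(eigvals[0], 0.0)))
    traversable = slope_deg <= max_slope_deg and roughness <= max_roughness
    return slope_deg, roughness, bool(traversable)


if __name__ == "__main__":
    xs, ys = np.meshgrid(np.linspace(0, 1, 5), np.linspace(0, 1, 5))
    flat = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
    print(patch_traversability(flat))
```

In practice a scan would be binned into a grid of such patches, with each cell labeled by this test to build a hazard map.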