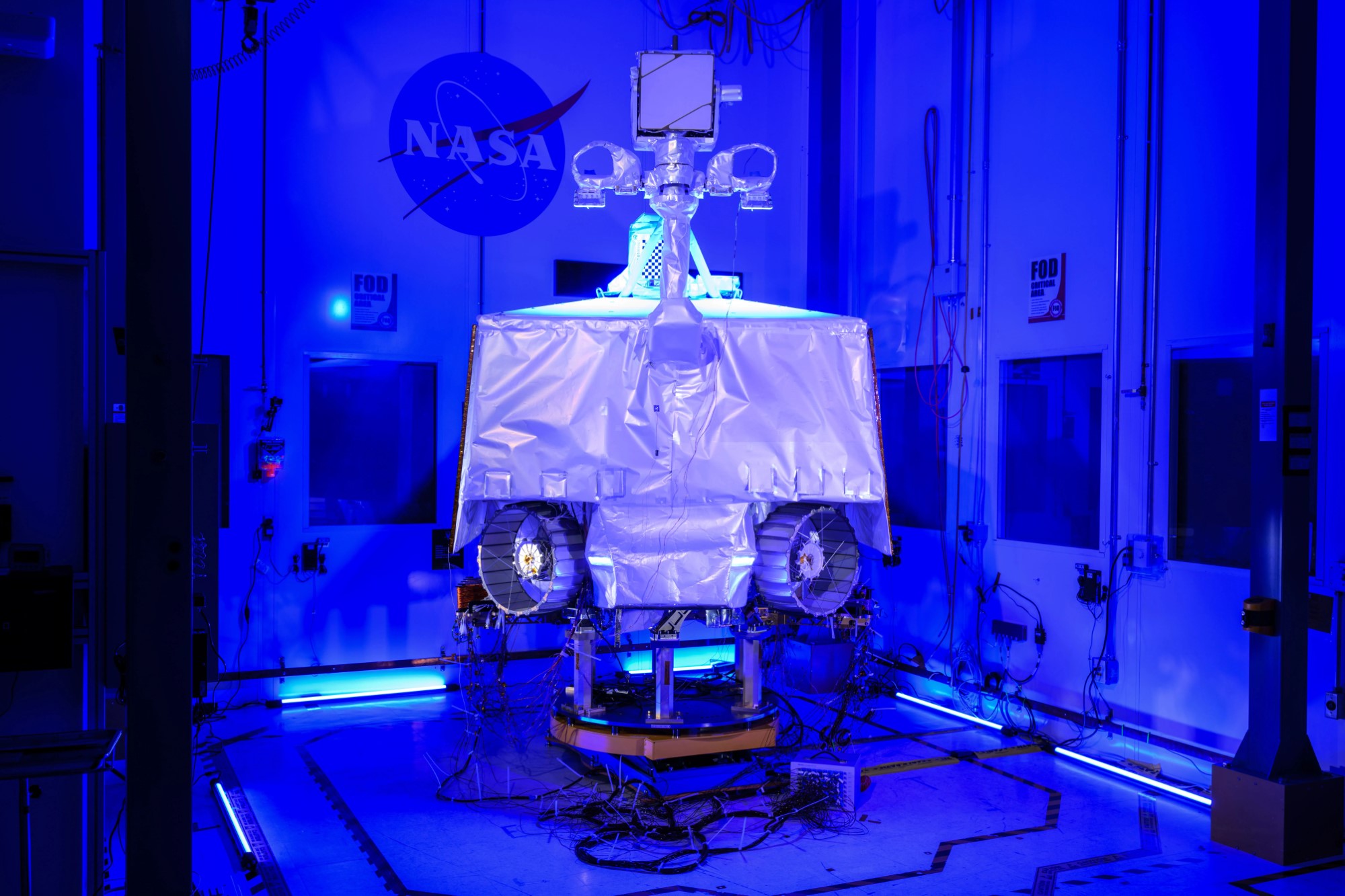

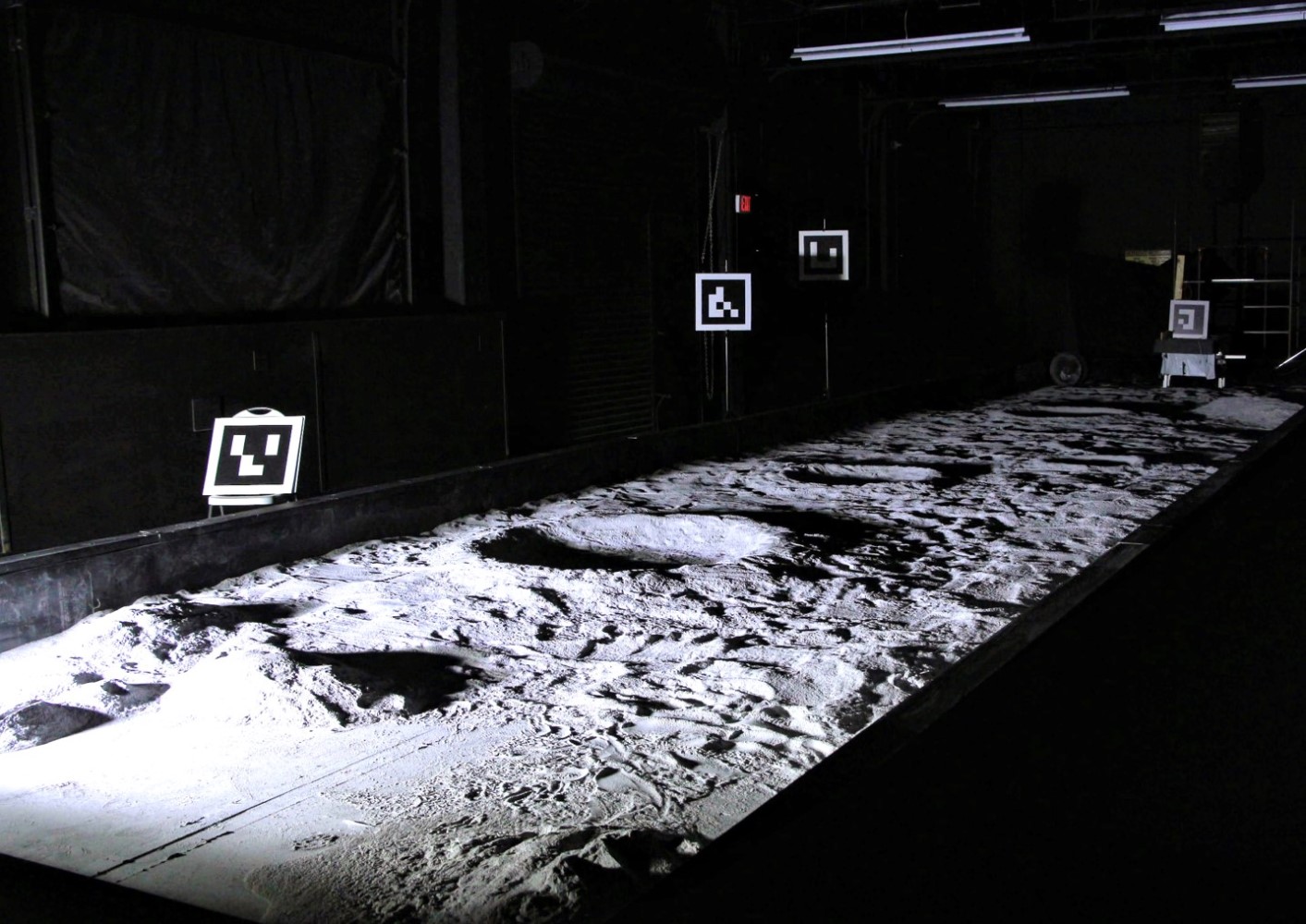

My research seeks to improve the performance of computer vision in extreme environments by understanding and exploiting the physics of light transport. It is well known that active illumination in the form of “structured light” can greatly enhance image understanding; however, accurately modeled natural illumination can be leveraged in the same manner. My dissertation explored new ways to integrate computer vision, lighting, and photometry to tackle the most challenging perception environments. I have continued this approach in my professional career at NASA by leading navigation efforts on the VIPER and Resource Prospector missions, both targeted at the polar regions of the Moon. I also led appearance modeling efforts on several of NASA’s photorealistic simulators for the Moon and Europa.